BERKELEY, California – A group of researchers has partnered with the Anti-Defamation League to fight online hate speech by teaching computers to recognize it on social-media platforms.

The Online Hate Index project out of the D-Lab at the University of California, Berkeley, aims to identify hate speech, study its impact and eventually design a plan to counteract hateful content.

Using artificial intelligence, teams of social scientists and data analysts are working to write programs that can search through thousands of posts looking for malicious content, said Claudia Von Vacano, executive director of digital humanities at Berkeley. Although the project is still in its early stages, the program already correctly identifies about 85 percent of hate speech.

The software is paired with a problem-solving lab of experts who help companies navigate the line between protected free speech and content that dangerously targets marginalized groups, Von Vacano said.

Von Vacano and Brittan Heller, director of technology and society for the Anti-Defamation League (ADL), started the Online Hate Index in 2012. It began by targeting hate speech on Reddit, the popular web forum. The project has attracted interest from companies such as Google, Twitter and Facebook, which have formed partnerships with the ADL and the D-Lab and plan to use the Online Hate Index on their platforms, Von Vacano said.

Daniel Kelly, assistant director of policy and programs for the ADL, said the organization began fighting online hate in 2014, when it released guidelines for companies hoping to limit damage done by extremists online. He called the Online Hate Index an innovative project that’s designed to target aspects of online hate that have been overlooked by similar studies.

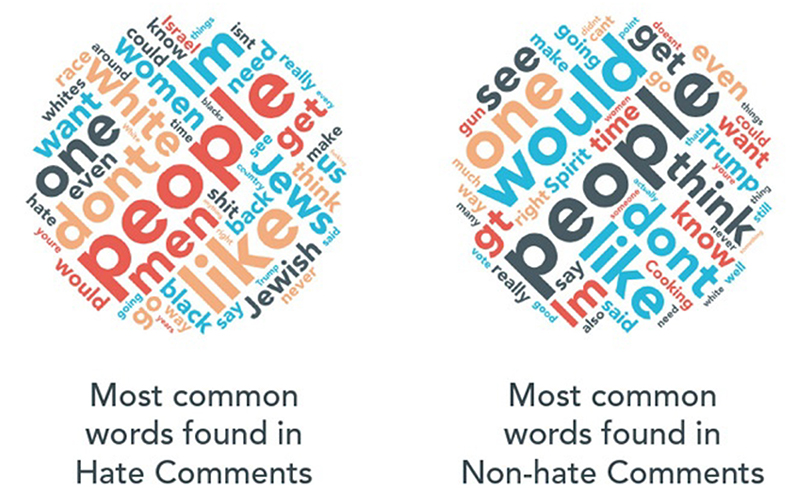

“What we are doing is using machine learning and social science to understand hate speech in a new way,” Kelly said. “We are taking it from the perspective of targets of hate online.”

The project aims to be transparent by lifting the “black veil” over data and analytics from social media companies, he said. Many companies keep private the data and statistics behind their terms of service and user policies. One of his main concerns is that the ADL and D-Lab cannot tell whether these policies incorporate the perspectives of the marginalized groups they affect.

To build their research teams, the D-Lab and ADL recruited members with diverse backgrounds, including different ethnicities, genders, academic fields and viewpoints, said Von Vacano, who is also in charge of recruitment for the Online Hate Index.

“Our linguist, for example, is delving deeper into issues of threat,” she said.

One of the biggest challenges faced by the teams was defining the intensity of statements made by Reddit users, Von Vacano said, because hate speech is not clearly defined.

To solve that problem, the ADL and D-Lab use a scale to characterize posts. At the lowest level, a biased post might only hint at hateful opinions. At the next level, hateful content may dehumanize an entire class of people.

The most extreme examples of online hate are direct threats to individuals. One such threat is doxing, in which people with malicious intent publish someone’s personal information, such as a home address or phone number, putting that person in harm’s way and leaving them vulnerable to unwanted attention.

“Going into the project,” Von Vacano said, “we kind of naïvely thought that we could ingest large amounts of text and, at the other end, say on a binary level ‘This is hate … this is not hate.’

“At this point, we have a much more sophisticated understanding of hate speech as a linguistic phenomenon, and we are really dissecting hate speech as a construct with multiple components.”

The first stage of the project was completed in February; more information can be found on the ADL’s website. Stage 2 is scheduled for release this summer.

This story was produced by the Walter Cronkite School-based Carnegie-Knight News21 “Hate in America” national reporting project. The full report will be released in August.